Doing Our Best

Practices in Scientific Computing

Kathryn (Katy) Huff

HackIllinois, Urbana, IL, February 24, 2018

“Heavier-than-air flying machines are impossible” - Lord Kelvin, 1895

“ Organized Skepticism. Scientists are critical: All ideas must be tested and are subject to rigorous structured community scrutiny.” - R.K. Merton, 1942

“the first principle is that you must not fool yourself, and you are the easiest person to fool.” - R. Feynman, 1974

“I am thinking about something much more important than bombs. I am thinking about computers.” - John von Neumann, 1946.

Science

- builds and organizes knowledge

- tests explanations about the universe

- systematically,

- objectively,

- transparently,

- and reproducibly.

Otherwise it's not science.

Science relies on

- peer review,

- skepticism,

- transparency,

- attribution,

- accountability,

- collaboration,

- and impact.

Since the 6th century BCE, science has been perfecting these tenets.

Open source software is now superior at all of them.

Computers

should...

- improve efficiency,

- reduce human error,

- automate the mundane,

- simplify the complex,

- and accelerate research.

But scientists aren't trained to use them effectively.

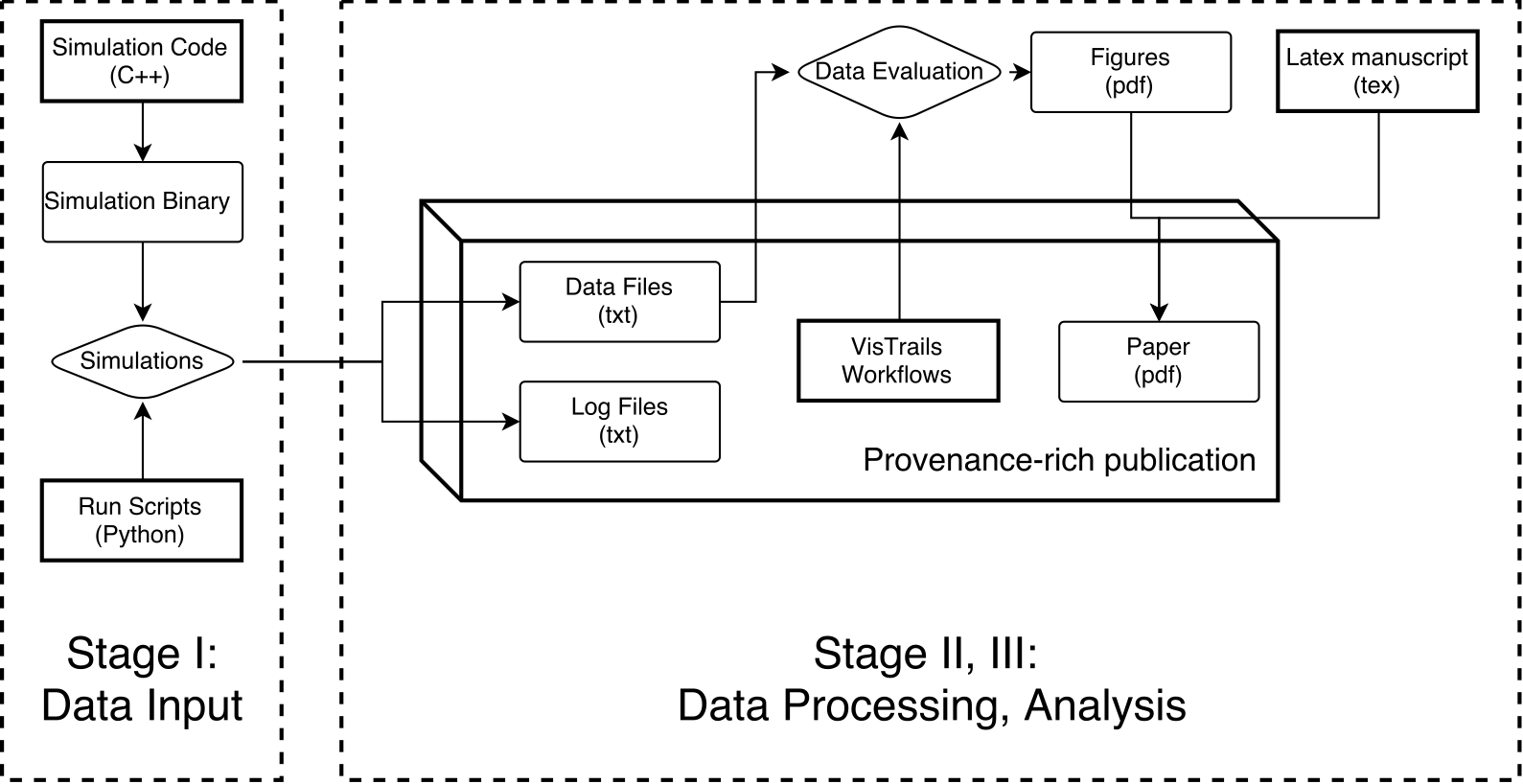

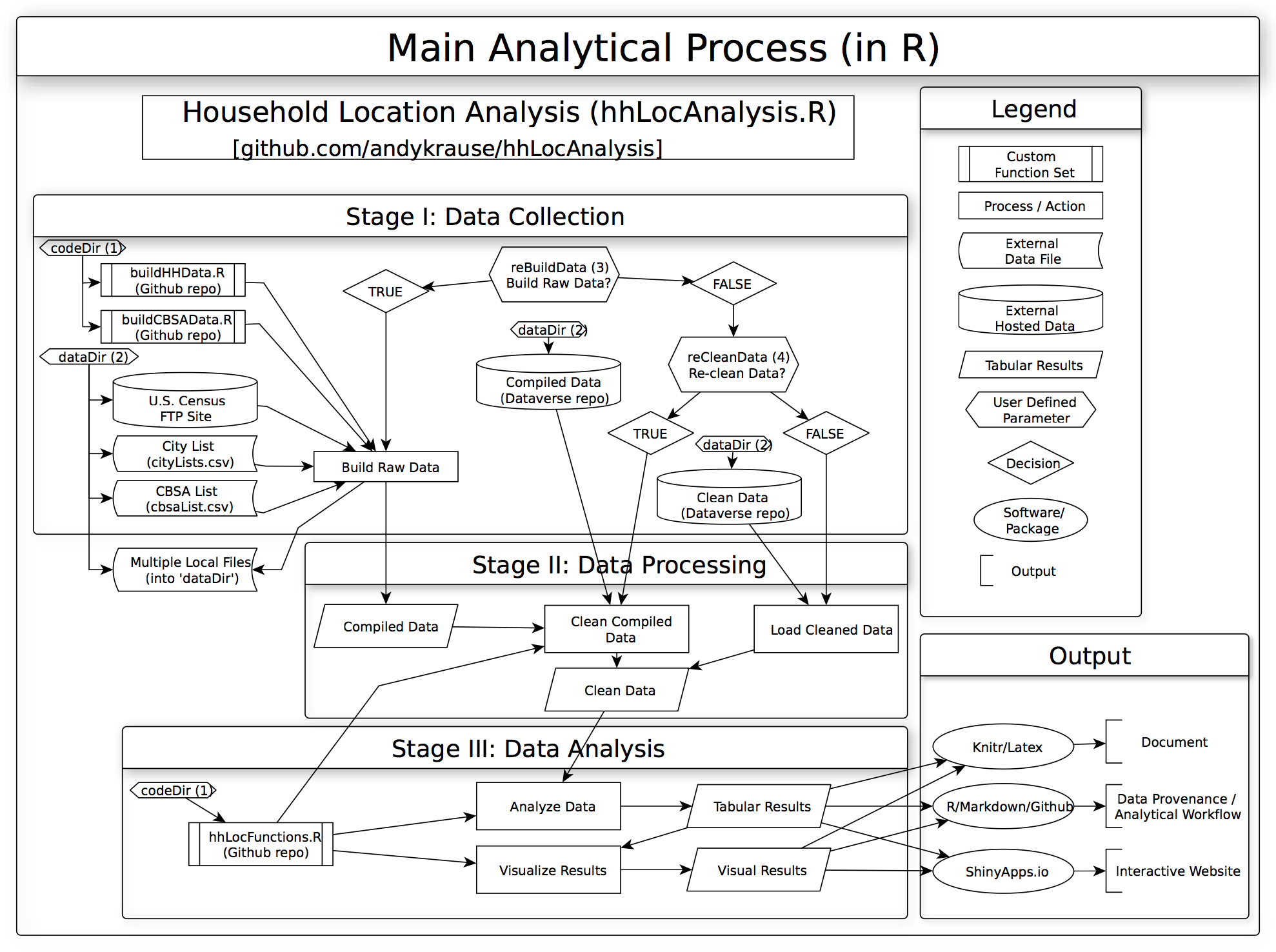

“ Computational science is a special case of scientific research: the work is easily shared via the Internet since the paper, code, and data are digital and those three aspects are all that is required to reproduce the results, given sufficient computation tools. ” - Stodden, 2010.

Getting Started

“ Organized Skepticism. Scientists are critical: All ideas must be tested and are subject to rigorous structured community scrutiny.” - R.K. Merton, 1942

Data Storage

- Good: pencil and paper

- Better: spreadsheet

- Best: standardized file format, database management system

Formats: Evaluated Nuclear Data File (ENDF), Evaluated Nuclear Structure Data File (ENSDF), Hierarchical Data Format (HDF), etc.

Management: C/Python/Fortran APIs, SQL, MySQL, MongoDB, etc.
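As a minimal sketch of the "Best" tier, the snippet below keeps a few measurements in SQLite through Python's built-in `sqlite3` module. The table name, column names, helper functions, and values are all hypothetical, chosen only to illustrate the idea.

```python
import sqlite3

def build_measurement_db(rows):
    """Create an in-memory SQLite database of (sample, mass_g) rows.

    The schema and data are hypothetical illustrations.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE measurements (sample TEXT, mass_g REAL)")
    conn.executemany("INSERT INTO measurements VALUES (?, ?)", rows)
    conn.commit()
    return conn

def mean_mass(conn, sample):
    """Return the mean mass for one sample, computed by the database."""
    cursor = conn.execute(
        "SELECT AVG(mass_g) FROM measurements WHERE sample = ?", (sample,)
    )
    return cursor.fetchone()[0]

conn = build_measurement_db([("a", 1.0), ("a", 3.0), ("b", 10.0)])
print(mean_mass(conn, "a"))
```

Replacing `":memory:"` with a file path would persist the data between runs.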

Backing Up Files

- Good: hope

- Better: nightly emails

- Best: remote version control

Version Control Systems: cvs, svn, hg, git

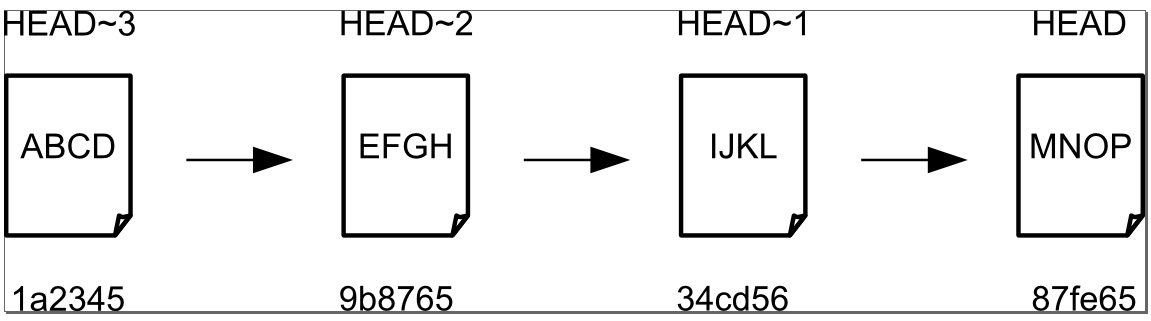

Managing Changes

- Good: naming convention

- Better: clever naming convention

- Best: local version control

Getting It Done

“ It takes just as much time to write a good paper as it takes to write a bad one. ” - Polterovich, 2014

Analysis

- Good: pencil and calculator

- Better: spreadsheets, matlab, mathematica

- Best: scripting, open source libraries, modern programming language

Hint: Python, scipy, numpy, numba, pandas, scikit-learn, scikit-image, etc.

Multiple File Cleanup

- Good: manually edit every file

- Better: search and replace in each file

- Best: scripted batch editing

Hint: try a tutorial on BASH, CSH, Python, or Perl.
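Scripted batch editing might look like the sketch below, which applies one regular-expression substitution to every matching file in a directory. The function name `batch_replace`, the file names, and the file contents are hypothetical. The demonstration runs on throwaway files in a temporary directory.

```python
import re
import tempfile
from pathlib import Path

def batch_replace(directory, pattern, replacement, glob="*.txt"):
    """Apply one regex substitution to every matching file.

    Returns the names of the files that actually changed.
    """
    changed = []
    for path in sorted(Path(directory).glob(glob)):
        original = path.read_text()
        updated = re.sub(pattern, replacement, original)
        if updated != original:
            path.write_text(updated)
            changed.append(path.name)
    return changed

# Demonstrate on throwaway files in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "run1.txt").write_text("temp = 300 K\n")
    Path(tmp, "run2.txt").write_text("pressure = 1 atm\n")
    changed_files = batch_replace(tmp, r"\btemp\b", "temperature")
    run1_text = Path(tmp, "run1.txt").read_text()

print(changed_files)
```

Keeping the files under version control before running a script like this makes the batch edit easy to review and revert.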

Executing Workflows

- Good: retype a series of commands

- Better: bash script

- Best: build system

Build System Tools: make, autoconf, automake, cmake, etc.

Data Structures

- Good: 100 string variables holding doubles

- Better: lists of lists of doubles

- Best: appropriate powerful data structures

Hint: In Fortran, learn about arrays. In C++, learn about maps, vectors, deques, queues, etc. In Python, the power lies in dictionaries and numpy arrays.
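A small sketch of the difference, using only a built-in Python dictionary: instead of one variable per value, readings are grouped under meaningful keys and processed in one pass. The detector names and numbers are hypothetical.

```python
# Hypothetical detector readings keyed by detector name.
readings = {
    "detector_a": [1.0, 2.0, 3.0],
    "detector_b": [4.0, 6.0],
}

def mean_by_key(data):
    """Return the mean of each list of values, keyed by the same names."""
    return {name: sum(values) / len(values) for name, values in data.items()}

means = mean_by_key(readings)
print(means["detector_a"])
```

Adding a third detector requires a new dictionary entry, not a new variable and a new line of arithmetic.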

API Design

- Good: single block of procedural code

- Better: separate functions

- Best: small, testable functions, grouped into classes, DRY

DRY: Don't Repeat Yourself. Code replication is bug proliferation.
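One way to read the "Best" tier, sketched with hypothetical function names: the conversion rule lives in exactly one small function, and everything else reuses it rather than repeating the arithmetic.

```python
def celsius_to_kelvin(temp_c):
    """Convert one temperature from degrees Celsius to kelvin."""
    return temp_c + 273.15

def convert_all(temps_c):
    """Convert a sequence of temperatures by reusing the single converter."""
    return [celsius_to_kelvin(t) for t in temps_c]

print(convert_all([0.0, 100.0]))
```

If the conversion ever needs a fix, there is one place to change and one function to test.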

Variable Naming

- Good: d1, d2, d3

- Better: x, y, z

- Best: p.x, p.y, p.z, p=Point(x,y,z)
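The `Point(x, y, z)` idea could be sketched in Python with a dataclass, as below. The `distance_to` method is a hypothetical addition, included only to show that a named structure gives behavior a natural home.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Point:
    """A point in 3D space."""
    x: float
    y: float
    z: float

    def distance_to(self, other):
        """Return the Euclidean distance to another Point (illustrative helper)."""
        return math.sqrt(
            (self.x - other.x) ** 2
            + (self.y - other.y) ** 2
            + (self.z - other.z) ** 2
        )

p = Point(1.0, 2.0, 2.0)
origin = Point(0.0, 0.0, 0.0)
print(p.distance_to(origin))
```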

File I/O

- Good: none, hardcoded variables

- Better: plain text input file, line-by-line homemade string parsing

- Best: file parsing library

Tools: python argparse, xml rng, etc.
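As a hedged illustration of replacing hardcoded variables with a parsing library, the sketch below uses `argparse` from the Python standard library. The program description, the `input_file` argument, and the `--steps` option are hypothetical.

```python
import argparse

def build_parser():
    """Build a parser for a hypothetical simulation driver."""
    parser = argparse.ArgumentParser(description="Run a hypothetical simulation.")
    parser.add_argument("input_file", help="path to the input file")
    parser.add_argument("--steps", type=int, default=100,
                        help="number of time steps (default: 100)")
    return parser

# Parse an explicit argument list so the example runs without a command line.
args = build_parser().parse_args(["reactor.inp", "--steps", "250"])
print(args.input_file, args.steps)
```

In a real script, calling `parse_args()` with no arguments reads from the command line instead.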

Getting It Right

“ The scientific method’s central motivation is the ubiquity of error—the awareness that mistakes and self-delusion can creep in absolutely anywhere and that the scientist’s effort is primarily expended in recognizing and rooting out error. ” - Donoho, 2009.

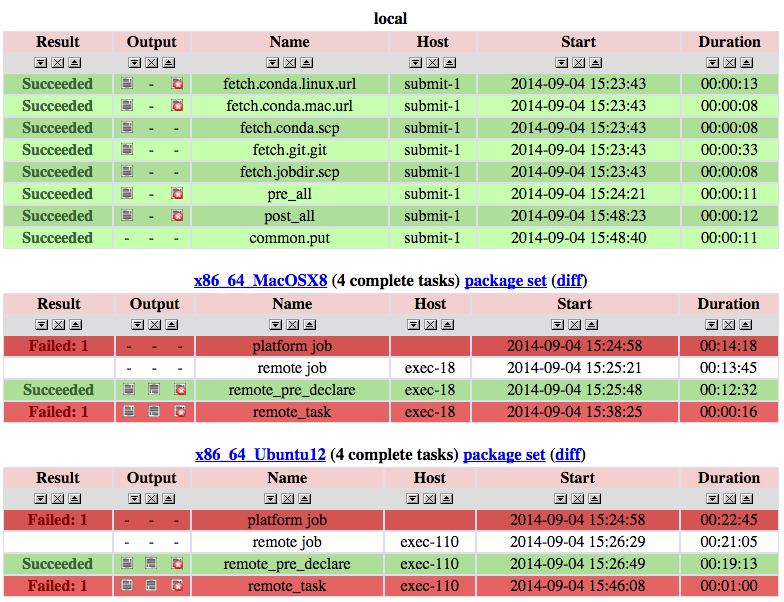

Error Detection

- Good: show results to experts

- Better: integration testing

- Best: unit test suite, continuous integration
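A unit test suite can start very small. The sketch below uses Python's built-in `unittest`. The function under test, `relative_error`, is a hypothetical stand-in for real analysis code.

```python
import unittest

def relative_error(measured, expected):
    """Return |measured - expected| / |expected| (hypothetical code under test)."""
    if expected == 0:
        raise ValueError("expected value must be nonzero")
    return abs(measured - expected) / abs(expected)

class TestRelativeError(unittest.TestCase):
    def test_exact_match_is_zero(self):
        self.assertEqual(relative_error(5.0, 5.0), 0.0)

    def test_known_value(self):
        self.assertAlmostEqual(relative_error(11.0, 10.0), 0.1)

    def test_zero_expected_raises(self):
        with self.assertRaises(ValueError):
            relative_error(1.0, 0.0)

# Run the suite programmatically so the example works as a plain script.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestRelativeError)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

A continuous integration service would run the same suite automatically on every change.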

Error Diagnostics

- Good: re-re-read the code

- Better: print statements

- Best: use a linter, a debugger, and a profiler

Tools: cpplint, pyflakes, gdb, lldb, pdb, idb, valgrind, kernprof, kcachegrind

Error Correction

- Good: fix code

- Better: fix, add an exception

- Best: fix, add an exception, add a test
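The three steps of the "Best" tier might look like this for a hypothetical bug in which a `mean` function crashed confusingly on empty input. The function and test names are illustrative assumptions.

```python
def mean(values):
    """Return the arithmetic mean of a non-empty sequence of numbers."""
    # The exception: fail loudly with a clear message rather than
    # letting an empty input surface as a ZeroDivisionError.
    if len(values) == 0:
        raise ValueError("mean() requires at least one value")
    return sum(values) / len(values)

def test_mean_rejects_empty_input():
    """The regression test: pins the bug so it cannot silently return."""
    try:
        mean([])
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for empty input")

def test_mean_known_value():
    assert mean([1.0, 2.0, 3.0]) == 2.0

test_mean_rejects_empty_input()
test_mean_known_value()
```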

Getting It Together

“ Two of the biggest challenges scientists and other programmers face when working with code and data are keeping track of changes (and being able to revert them if things go wrong), and collaborating on a program or dataset. ” - Wilson, et al. 2014.

Merging Collaborative Work

- Good: single master copy, waiting

- Better: emails and patches

- Best: remote version control

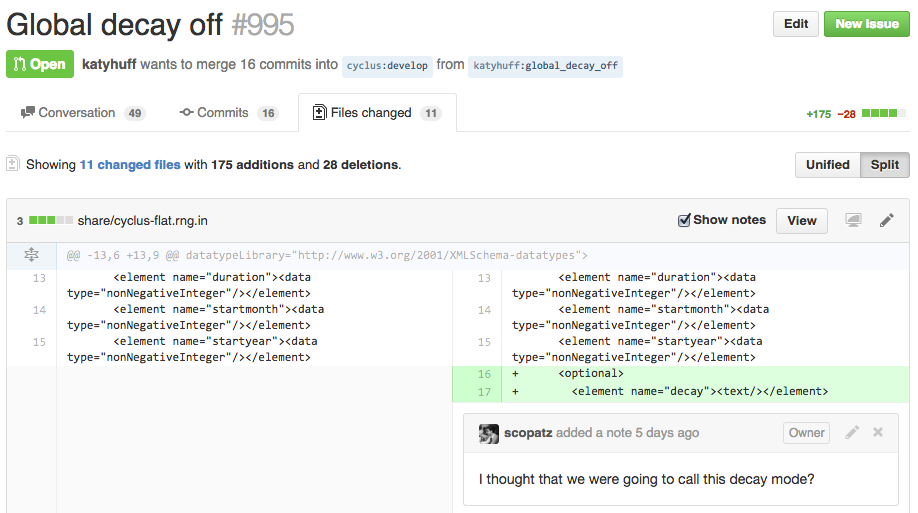

Peer Review For Code

- Good: separation of concerns

- Better: shared repository

- Best: peer-reviewed pull requests

“ just-in-time review of small code changes is more likely to succeed than large-scale end-of-work reviews. ” - Petre, Wilson 2014

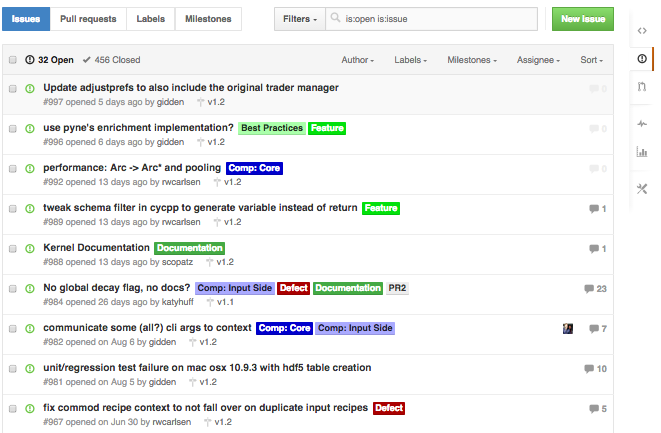

Teamwork

- Good: weekly research meetings, year-long tasks

- Better: daily conversations, month-long goals

- Best: pair programming, issue tracking

Software Handovers

- Good: zip file, theory paper

- Better: comments in code, example input file

- Best: automated documentation, test suite

Books: Clean Code, Working Effectively with Legacy Code

Tools: sphinx, doxygen, googletest, unittest, nosetests

Getting It Out There

“ If a piece of scientific software is released in the forest, does it change the field? ”

Plotting

- Good: custom formatting, clickable GUI

- Better: plot format templates (excel, mathematica)

- Best: scripted plotting, matplotlib, gnuplot, etc.

Writing

- Good: stone tablet, microsoft word

- Better: word with track changes, open office

- Best: plain text markup with version control and a makefile

Tools: LaTeX, markdown, restructured text

Distribution Control

- Good: "email to request access"

- Better: license file

- Best: license file, citation file, DOI, forkable repository

Example: github.com/cyclus

Community Adoption

- Good: none, internal use only

- Better: online repository, developer email online

- Best: issue tracker, user/developer listhost(s), online documentation

Unique Issue in Nuclear Engineering

Export control is serious.

Write programs for people, not computers.

- A program should not require its readers to hold more than a handful of facts in memory at once.

- Make names consistent, distinctive, and meaningful.

- Make code style and formatting consistent.

Let the computer do the work.

- Make the computer repeat tasks.

- Save recent commands in a file for re-use.

- Use a build tool to automate workflows.

Make incremental changes.

- Work in small steps with frequent feedback and course correction.

Use a version control system.

- Put everything that has been created manually in version control.

Don't repeat yourself (or others).

- Every piece of data must have a single authoritative representation in the system.

- Modularize code rather than copying and pasting.

- Re-use code instead of rewriting it.

Plan for mistakes.

- Add assertions to programs to check their operation.

- Use an off-the-shelf unit testing library.

- Turn bugs into test cases.

- Use a symbolic debugger.

Optimize software only after it works correctly.

- Use a profiler to identify bottlenecks.

- Write code in the highest-level language possible.
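A rough sketch of profiling before optimizing, using `cProfile` and `pstats` from the Python standard library. The function `slow_sum_of_squares` is a hypothetical stand-in for working code whose cost is unknown.

```python
import cProfile
import io
import pstats

def slow_sum_of_squares(n):
    """Deliberately plain loop standing in for real, already-correct code."""
    total = 0
    for i in range(n):
        total += i * i
    return total

profiler = cProfile.Profile()
profiler.enable()
answer = slow_sum_of_squares(10_000)
profiler.disable()

# Collect the report as text, sorted by cumulative time, top 5 entries.
stream = io.StringIO()
pstats.Stats(profiler, stream=stream).sort_stats("cumulative").print_stats(5)
report = stream.getvalue()
print(report)
```

The report names the functions where time is actually spent, which is where optimization effort should go.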

Document design and purpose, not mechanics.

- Document interfaces and reasons, not implementations.

- Refactor code in preference to explaining how it works.

- Embed the documentation for a piece of software in that software.

Collaborate.

- Use pre-merge code reviews.

- Use pair programming when bringing someone new up to speed and when tackling particularly tricky problems.

- Use an issue tracking tool.

“Reading brings us unknown friends” - Honoré de Balzac

- BIDS

- Justin Kitzes

- Fatma Imamoglu

- Daniel Turek

- Ben Marwick

- Chapter Authors

- Case Study Authors

- Reproducibility Working Group

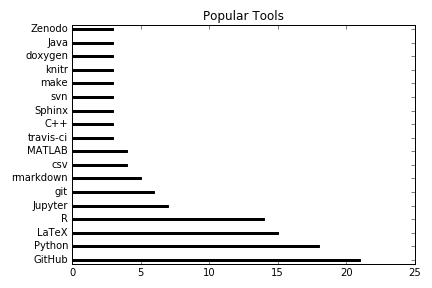

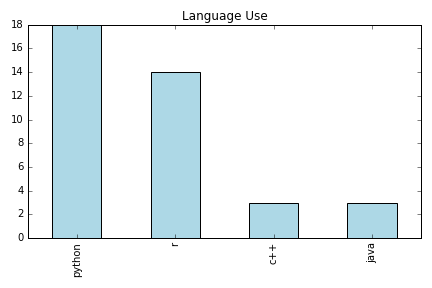

Reproducibility and Open Science Conference

May 21&22, 2015

- Three days

- Invitation Only

- Case Studies, Education, Self-assessment

- https://github.com/BIDS/repro-conf

- Incentives

- Pain Points

- Recommendations from the Authors

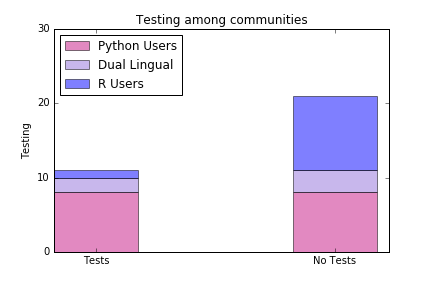

- A Little Data

- Needs

Incentives

- verifiability

- collaboration

- efficiency

- extensibility

- "focus on science"

- "forced planning"

- "safety for evolution"

Pain Points

- People and Skills

- Dependencies, Build Systems, and Packaging

- Hardware Access

- Testing

- Publishing

- Data Versioning

- Time and Incentives

- Data restrictions

Recommendations

- version control your code

- open your data

- automate everywhere possible

- document your processes

- test everything

- use free and open tools

Recommendations: Continued

- avoid excessive dependencies

- or at least package their installation

- host code on a collaborative platform (e.g. GitHub)

- get DOIs for data and code

- plain text data is preferred, timeless

- explicitly set seeds

- workflow frameworks can be overkill
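The "explicitly set seeds" recommendation can be sketched with Python's built-in `random` module. The function name `simulate_noise` and the seed values are hypothetical.

```python
import random

def simulate_noise(seed, n=5):
    """Draw n pseudo-random samples from an explicitly seeded generator."""
    rng = random.Random(seed)  # local generator; global state is untouched
    return [rng.gauss(0.0, 1.0) for _ in range(n)]

first = simulate_noise(seed=42)
second = simulate_noise(seed=42)
print(first == second)
```

Recording the seed alongside the results lets someone else regenerate the same stochastic run.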

Emergent Needs

- Common denominator tools should support reproducibility

- Improved configuration and build systems

- Reproducibility at scale for HPC

- Standardized hardware configurations for limited-availability experimental apparatuses.

- Better understanding of incentives for unit testing.

- Greater adoption of unit testing irrespective of programming language.

- Broader community adoption around publication formats that allow parallel editing

- Broader adoption of data storage, versioning, and management tools.

- Increased community recognition of the benefits of reproducibility.

- Incentive systems where reproducibility is not self-incentivizing.

- Standards around scrubbed and representational data

- Community adoption for file format standards within some domains.

- Domain standards which translate well outside of their own scientific communities.

Credit!

A lot of these thoughts came from my personal experience. However, much of it was annealed from conversations with colleagues throughout the scientific and computing communities (too many of you to name).

Resources

Ok, I'm convinced. So how can one learn this stuff?

Online Resources

- Software Carpentry

- Data Carpentry

- Version Control: GitHub, Pro Git book

- Testing: nose, googletest

- Documentation: Sphinx, Doxygen

- Python Worker's Party

- The Hacker Within

Papers!

- Wilson, Greg, D. A. Aruliah, C. Titus Brown, Neil P. Chue Hong, Matt Davis, Richard T. Guy, Steven H. D. Haddock, Kathryn D. Huff, et al. 2014. “Best Practices for Scientific Computing.” PLoS Biol 12 (1): e1001745. doi:10.1371/journal.pbio.1001745.

- Wilson, Greg, Jennifer Bryan, Karen Cranston, Justin Kitzes, Lex Nederbragt, and Tracy K. Teal. 2016. “Good Enough Practices in Scientific Computing.” arXiv:1609.00037 [Cs], August. http://arxiv.org/abs/1609.00037.

- Scopatz, Anthony, and Kathryn D. Huff. 2015. Effective Computation in Physics. 1st edition. S.l.: O’Reilly Media.

- Blanton, Brian, and Chris Lenhardt. 2014. “A Scientist’s Perspective on Sustainable Scientific Software.” Journal of Open Research Software, Issues in Research Software, 2 (1): e17.

- Donoho, David L., Arian Maleki, Inam Ur Rahman, Morteza Shahram, and Victoria Stodden. 2009. “Reproducible Research in Computational Harmonic Analysis.” Computing in Science & Engineering 11 (1): 8–18. doi:10.1109/MCSE.2009.15.

- Goble, Carole. 2014. “Better Software, Better Research.” IEEE Internet Computing 18 (5): 4–8. doi:10.1109/MIC.2014.88.

- Hannay, J. E, C. MacLeod, J. Singer, H. P Langtangen, D. Pfahl, and G. Wilson. 2009. “How Do Scientists Develop and Use Scientific Software?” In Proceedings of the 2009 ICSE Workshop on Software Engineering for Computational Science and Engineering, 1–8.

- Joppa, L. N., G. McInerny, R. Harper, L. Salido, K. Takeda, K. O’Hara, D. Gavaghan, and S. Emmott. 2013. “Troubling Trends in Scientific Software Use.” Science 340 (6134): 814–15. doi:10.1126/science.1231535.

- Merali, Zeeya. 2010. “Computational Science: ...Error.” Nature 467 (7317): 775–77. doi:10.1038/467775a.

- Petre, Marian, and Greg Wilson. 2014. “Code Review For and By Scientists.” arXiv:1407.5648 [cs], July.

- Schossau, Jory, and Greg Wilson. 2014. “Which Sustainable Software Practices Do Scientists Find Most Useful?” arXiv:1407.6220 [cs], July.

- Stodden, Victoria. 2010. “The Scientific Method in Practice: Reproducibility in the Computational Sciences.” SSRN Electronic Journal. doi:10.2139/ssrn.1550193.

- Wicherts, Jelte M., Marjan Bakker, and Dylan Molenaar. 2011. “Willingness to Share Research Data Is Related to the Strength of the Evidence and the Quality of Reporting of Statistical Results.” PLoS ONE 6 (11): e26828. doi:10.1371/journal.pone.0026828.

Good Books

- Clean Code - Robert C. Martin

- Working Effectively with Legacy Code - Michael Feathers

- Effective Computation in Physics - Huff, Scopatz

THE END

Katy Huff

katyhuff.github.io/2018-02-24-hackillinois

Doing Our Best: Practices in Scientific Computing by Kathryn Huff is licensed under a Creative Commons Attribution 4.0 International License.

Based on a work at http://katyhuff.github.io/2018-02-24-hackillinois.